The Transformer Bubble

Real AI won’t run on the exponentially big and dumb transformers that power today’s LLMs

The “AI bubble” chatter somehow keeps overlooking the most significant miscalculation of all. We’re in a transformer bubble — not an AI bubble or even an LLM bubble.

AI is every bit as disruptive as folks claim. But real AI won’t run on the exponentially expensive transformers that power today’s big-and-dumb LLMs.

The mistake is talking about AI as if AI were constrained only to today’s transformer-based large language models. That’s like talking about physics as if physics were constrained only to Newtonian models, while naively refusing to contemplate the possibility of nuclear physics, relativity, or quantum theory.

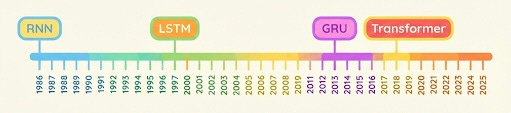

For newcomers to AI: transformers are just the latest iteration of a long line of architectures for language models. They only replaced GRUs about seven years ago, which only replaced LSTMs five years before that, which replaced many other previous iterations of RNNs and other neural networks — and transformers too will be replaced before long.

Transformers are oversimplistic, like Newtonian models. They’re dumb. As Raising AI points out, even primitive transformer-based LLMs like GPT 2.0 had to be trained on trillions of words of English — and yet they still made mistakes 4-year-olds laughed at.

In contrast, those 4-year-olds only needed to be trained on about 15 million words of English spoken to them — over their entire lifetime.

GPT 2.0 was making those laughable mistakes even after training on the square of the amount of data the 4-year-olds needed. That’s not just a factor of 10x, or even 1000x. Transformer-based LLMs need an exponential amount of training and data compared to those 4-year-olds.
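To make the size of that gap concrete, here is a minimal arithmetic sketch. It assumes only the figure quoted above (about 15 million words heard by a 4-year-old) and takes "the square of" literally; the variable names are illustrative, and the figure itself is not independently verified here.

```python
# Figure quoted in the article (an assumption here, not independently verified).
CHILD_WORDS = 15_000_000  # words spoken to a 4-year-old over their lifetime

# "The square of the amount of data the 4-year-olds needed", taken literally.
squared_words = CHILD_WORDS ** 2

# How many times larger the squared amount is than the child's exposure.
ratio = squared_words // CHILD_WORDS

print(f"child exposure : {CHILD_WORDS:,} words")
print(f"squared amount : {squared_words:,} words")
print(f"ratio          : {ratio:,}x")
```

With these inputs the squared amount comes to 225 trillion words, which matches the "trillions of words" scale, and the gap is a factor of 15 million rather than 10x or 1000x.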

Not the brightest bulb in the class.

The Transformer Boba

The way transformers learn is more like my adorable shih-tzu, Boba, than human toddlers. I’ve tried a million times to reason with Boba, explaining to her big lovely eyes why she shouldn’t poop on the floor.

Despite the full range of human emotions that Boba possesses, her dog brain just isn’t wired to understand the language that makes logical reasoning possible in human brains. Boba’s neural circuitry supports what in cognitive psychology is called System 1: automatic, intuitive, fast, heuristic mental processes below the level of conscious awareness.

Instead, we teach Boba through Pavlovian conditioning. When she poops on the floor, she gets negative reinforcement in the form of a mild “bad girl!” scolding. When she uses her toilet pad, she gets positive reinforcement in the form of an approving “good girl!” with a treat.

Transformers are more like Boba brains than toddler brains. Transformers are a kind of neural network that operates like an Artificial System 1: automatic, intuitive, fast, heuristic mental processes below the level of conscious awareness. Like Boba, transformers have to be trained through positive and negative reinforcement.

Training Boba certainly seems to take an exponential number of trials. The same goes for transformers. But human toddlers are able to understand simple conscious reasoning when you teach them — which helps them learn much faster.

The irrational amount of investment into exponentially large data centers (along with energy production) arises from a false premise — that AI will need to be trained the exponentially laborious way we teach Boba through positive and negative reinforcement — instead of the way human toddlers learn.

But that kind of AI isn’t artificial intelligence — it’s artificial idiocy.

AI is moving from Boba brains to toddler brains

Like humans — even toddlers — true AI will need to augment System 1 mental processes with System 2: consciously controlled, rational, slower, explicit mental processes. This will allow AIs to catch themselves when their intuition starts hallucinating, and to monitor their own unconscious biases — just as we humans do.

And this will lead to true AIs that learn exponentially faster — just like toddlers do.

True AIs with both System 1 and System 2 will be coming fast in the next year or two. The “reasoning AIs” of the past year — ChatGPT o1, o3, o4, 5, or DeepSeek R1, R2, R3, etc. — are just the clunkiest tip of the iceberg. We will see much more efficient competitors, ranging from enhanced descendants of chain-of-thought models to hybrid neurosymbolic architectures.

All the talk of compute scaling and data scaling is dangerously naive. By far the largest scaling will come instead from algorithmic scaling. Hugging Face’s CEO, Clem Delangue, has argued we’re in an LLM bubble, but even that’s not quite it. Language models are incredibly useful components for AI systems, and they’re here to stay. They just need to be powered by neural architectures that are less Boba brains and more toddler brains.

We’ve moved from “data is the new oil” to “data centers are the new refineries”. But when all the cars become electric, who will need either? What will happen to the massive infrastructure investments to power artificial idiocy?

When the transformer bubble pops, those investors will rue their failure to note that human brains only require 20W of power.

De Kai, I appreciate reading this after our conversations at Georgetown.

Question: any projects I should be looking at for hybrid neurosymbolic architectures?